11 Commonly Used Risk Assessment Models for AI

Here are 11 commonly used AI risk assessment models and how they help organizations build safe, ethical, and effective AI systems:

1. NIST AI Risk Management Framework (RMF)

The U.S. National Institute of Standards and Technology (NIST) developed this framework to manage risks associated with AI. The framework offers a structured approach to identifying, assessing, and mitigating AI risks throughout the model lifecycle. It emphasizes four core functions:

Map (contextualize risks)

Measure (evaluate risks)

Manage (implement controls)

Govern (establish the policies, accountability, and risk culture that underpin the other three functions)

It's designed to be broad and applicable across disparate industries. The NIST AI RMF is iterative, so you can adapt it as your AI deployments and risks evolve.

The framework promotes collaboration by integrating perspectives from data scientists, compliance officers, and business leaders. It also centers on the concept of "trustworthy AI," explicitly tying model governance to broader ethical goals like privacy and fairness.
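To make the four functions more concrete, here is a minimal sketch of a risk register in Python. It is an illustration only, not something the NIST framework prescribes: the `RiskEntry` fields, the 1 to 5 scales, the likelihood-times-impact score, and the example risks are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RiskEntry:
    """One row in a hypothetical AI risk register."""
    description: str            # Map: the risk, stated in its deployment context
    likelihood: int             # Measure: 1 (rare) to 5 (frequent), assumed scale
    impact: int                 # Measure: 1 (minor) to 5 (severe), assumed scale
    controls: List[str] = field(default_factory=list)  # Manage: mitigations in place
    owner: str = "unassigned"   # Govern: who is accountable for this risk

    @property
    def score(self) -> int:
        # A common but simplistic scoring rule; real programs may use richer measures.
        return self.likelihood * self.impact


def prioritize(register: List[RiskEntry]) -> List[RiskEntry]:
    """Return the register sorted from highest to lowest score."""
    return sorted(register, key=lambda entry: entry.score, reverse=True)


# Illustrative entries only; not taken from any real assessment.
register = [
    RiskEntry("Training data drifts from production data", likelihood=4, impact=3),
    RiskEntry("Model output leaks personal data", likelihood=2, impact=5,
              owner="privacy-team"),
    RiskEntry("Biased outcomes for a protected group", likelihood=3, impact=5),
]

ranked = prioritize(register)
for entry in ranked:
    print(entry.score, entry.description, entry.owner)
```

Because the framework is iterative, a register like this would be re-scored and re-sorted whenever the deployment context or the controls change.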

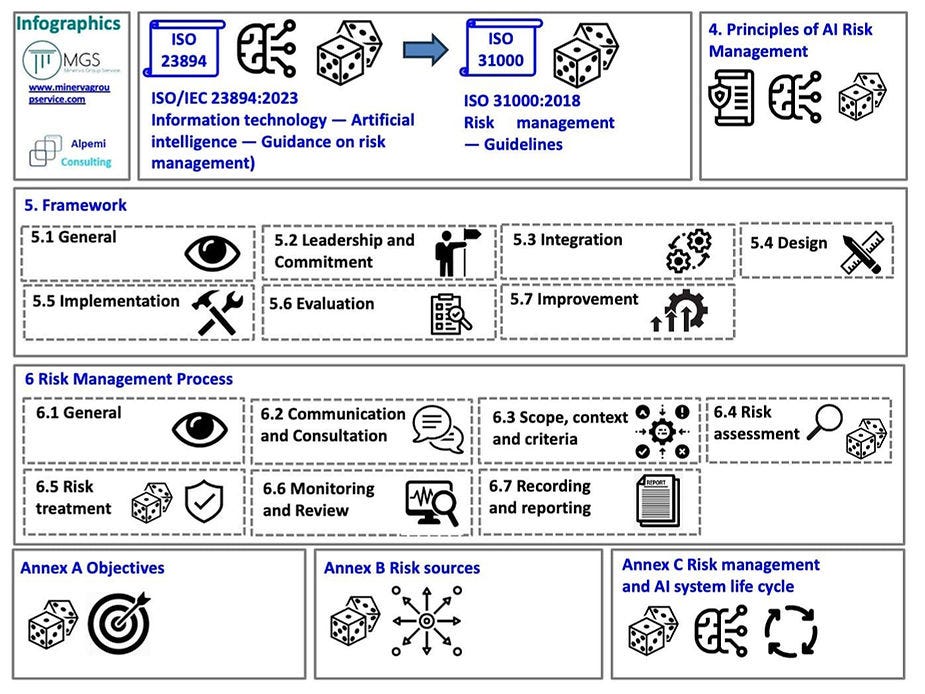

2. ISO/IEC 23894 AI Risk Management

This emerging international standard for AI risk management was developed by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). It uses a lifecycle approach that encourages continuous evaluation from design to deployment.

ISO/IEC 23894 uniquely emphasizes interoperability so that AI systems align with industry standards across borders and sectors. It also addresses the often-overlooked challenges of integrating AI into legacy systems by offering guidance for ensuring system compatibility and operational continuity.

The framework provides detailed advice on human oversight, which makes it particularly useful for high-risk sectors like healthcare and finance. However, ISO/IEC 23894 is still relatively new, and full adoption is not yet widespread.

3. AI Fairness 360 Toolkit

IBM developed this open-source toolkit to assess and mitigate biases in AI models. There are prebuilt metrics and algorithms to evaluate fairness in datasets and model predictions. It supports various bias mitigation techniques, such as re-weighting data or adjusting model outputs.

There are also handy tutorials and interactive tools, so it's user-friendly too. The toolkit is suitable for companies focused on fairness in high-impact areas like hiring or lending. While AI Fairness 360 is often viewed as a toolkit for developers, its value extends to non-technical users, such as compliance officers, who can use its prebuilt fairness metrics to audit AI systems. It includes over 70 fairness metrics and multiple bias mitigation algorithms.

One critical limitation of AI Fairness 360 is its lack of scalability for large datasets or real-time systems, which can impact its applicability.
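To show the kind of calculation behind these fairness metrics, here is a small plain-Python sketch. It does not use the AI Fairness 360 API; the function names and the toy data are assumptions made for illustration. It computes two widely used group-fairness metrics (statistical parity difference and disparate impact) and the instance weights used by the reweighing technique, which weights each (group, label) pair by its expected frequency under independence divided by its observed frequency.

```python
from collections import Counter


def selection_rate(labels, groups, group, weights=None):
    """Weighted share of favorable (1) labels within one group."""
    weights = weights or [1.0] * len(labels)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    favorable = sum(w for y, g, w in zip(labels, groups, weights) if g == group and y == 1)
    return favorable / total


def statistical_parity_difference(labels, groups, unprivileged, privileged, weights=None):
    """Unprivileged selection rate minus privileged selection rate (0 is parity)."""
    return (selection_rate(labels, groups, unprivileged, weights)
            - selection_rate(labels, groups, privileged, weights))


def disparate_impact(labels, groups, unprivileged, privileged, weights=None):
    """Ratio of unprivileged to privileged selection rates (1 is parity)."""
    return (selection_rate(labels, groups, unprivileged, weights)
            / selection_rate(labels, groups, privileged, weights))


def reweighing_weights(labels, groups):
    """Weight per (group, label) pair: expected count if independent / observed count."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * pair_counts[(g, y)])
        for (g, y) in pair_counts
    }


# Toy data (made up): group "a" is favored 4 times out of 5, group "b" 2 out of 5.
groups = ["a"] * 5 + ["b"] * 5
labels = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]

spd = statistical_parity_difference(labels, groups, unprivileged="b", privileged="a")
di = disparate_impact(labels, groups, unprivileged="b", privileged="a")

pair_weights = reweighing_weights(labels, groups)
instance_weights = [pair_weights[(g, y)] for g, y in zip(groups, labels)]
spd_after = statistical_parity_difference(labels, groups, "b", "a", instance_weights)

print(spd, di, spd_after)
```

On this toy data the reweighted selection rates of the two groups become equal, which is the effect the re-weighting approach aims for before a model is trained.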

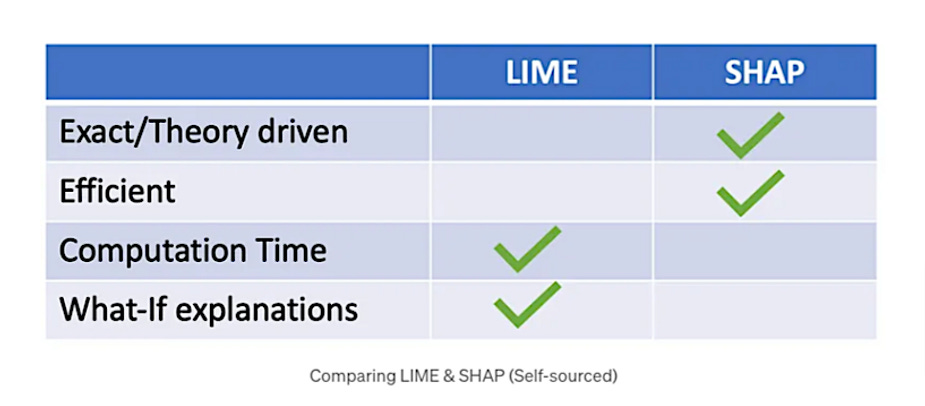

4. LIME (Local Interpretable Model-agnostic Explanations)

Developed by researchers at the University of Washington, LIME provides a method for explaining individual predictions of machine learning models by creating interpretable approximations.

LIME generates simplified, interpretable models (e.g., linear regressions) that approximate the behavior of complex models locally around specific data points. It's model-agnostic, so it's applicable to a wide range of AI systems.

LIME's strength is its adaptability. It's applicable to any model type, including black-box models like neural networks. Its explanations are particularly valuable for debugging so developers can identify anomalies or biases in specific predictions.

LIME works best for tabular and textual data and can struggle with high-dimensional or highly non-linear data. It's best paired with other explainability techniques, because it focuses on local explanations and might miss global model behaviors.
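The local-approximation idea described above can be sketched in a few lines of standard-library Python. This is a simplified illustration, not the `lime` package's implementation: the Gaussian perturbations, the exponential kernel, the parameter names (`scale`, `kernel_width`), and the example black-box function are all assumptions. The sketch perturbs the input around one point, weights each sample by its proximity to that point, and fits a weighted linear model whose coefficients serve as the explanation.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def local_surrogate(predict, x0, n_samples=500, scale=0.5, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear model to `predict` around the point x0.

    Returns (intercept, coefficients). The coefficients are the local explanation.
    """
    rng = random.Random(seed)
    dim = len(x0)
    # Accumulate the normal equations of weighted least squares:
    # (Z^T W Z) beta = Z^T W y, where each row of Z is [1, z_1, ..., z_dim].
    ztwz = [[0.0] * (dim + 1) for _ in range(dim + 1)]
    ztwy = [0.0] * (dim + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x0]      # perturbed sample
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x0))
        w = math.exp(-dist2 / kernel_width ** 2)           # closer samples count more
        row = [1.0] + z
        y = predict(z)                                     # query the black box
        for i in range(dim + 1):
            ztwy[i] += w * row[i] * y
            for j in range(dim + 1):
                ztwz[i][j] += w * row[i] * row[j]
    beta = solve_linear_system(ztwz, ztwy)
    return beta[0], beta[1:]


def black_box(v):
    """Stand-in for a complex model; any callable on a feature list works."""
    return math.sin(v[0]) + v[1] ** 2


intercept, coefficients = local_surrogate(black_box, x0=[0.0, 1.0])
print(intercept, coefficients)
```

The coefficients describe the model only near `x0`. Running the same function at a different point can give a different explanation, which is why local explanations can miss global behavior.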

5. SHAP (SHapley Additive exPlanations)

Introduced in a 2017 paper by Scott Lundberg and Su-In Lee, this explainability method uses Shapley values from cooperative game theory to explain how much each input feature contributes to a model's prediction. SHAP assigns each feature an importance score by averaging its marginal contribution to the prediction across different combinations of features.

SHAP is a mathematically robust explanation method that's useful for debugging, model validation, and ensuring transparency in AI apps. SHAP provides both local and global explanations, offering a more holistic understanding of a model's behavior. However, its computational complexity can be a challenge for large-scale models.
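To illustrate the marginal-contribution idea and why cost becomes a problem, here is a brute-force sketch of exact Shapley values in plain Python. It is not the `shap` library's API, which relies on faster approximations and model-specific algorithms. The toy `model`, the all-zeros `baseline`, and the choice to represent a "missing" feature by its baseline value are assumptions made for the example.

```python
from itertools import permutations


def shapley_values(predict, x, baseline):
    """Exact Shapley values by averaging marginal contributions over all orderings.

    A feature that has not yet been "added" keeps its baseline value.
    The loop visits n! orderings, so this is only feasible for a few features.
    """
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = x[i]                 # reveal feature i
            updated = predict(current)
            totals[i] += updated - previous   # marginal contribution of feature i
            previous = updated
    return [total / len(orderings) for total in totals]


def model(v):
    """Toy model with an interaction term between the two features."""
    return 2 * v[0] + 3 * v[1] + v[0] * v[1]


phi = shapley_values(model, x=[1.0, 2.0], baseline=[0.0, 0.0])
print(phi)  # [3.0, 7.0]
```

The two values sum to 10.0, the difference between the prediction at `x` and at the baseline, and the interaction term's contribution of 2 is split evenly between the features. The factorial number of orderings is the source of the computational cost mentioned above.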

While SHAP provides explainability through Shapley values, alternative tools like Citrusˣ enable users to explain model behavior and predictions to various stakeholders while ensuring models are compliance-ready. Citrusˣ facilitates analysis at global, local, cluster, and segment levels, providing a nuanced understanding of model decisions. This multi-level explainability aids in identifying biases and vulnerabilities and promotes responsible AI deployment.

6. HLEG AI Ethics Guidelines

These ethical guidelines for AI were developed by the European Commission's High-Level Expert Group on Artificial Intelligence (HLEG). This framework is particularly relevant for organizations operating in Europe, providing a foundation for compliance with the EU AI Act.

HLEG's approach is not only about technical risk mitigation but also about aligning AI with societal values, such as inclusivity and sustainability.

The guidelines include a practical "Assessment List for Trustworthy AI" (ALTAI), which helps organizations operationalize abstract ethical principles. They also prioritize "non-discrimination by design," addressing biases before systems are deployed.

7. O-RAN AI Framework

The O-RAN AI framework (by the O-RAN Alliance) outlines methods for integrating AI/ML into telecom networks for reliability, security, and performance. It includes guidelines for validating AI models in real-world network conditions.

O-RAN emphasizes "closed-loop automation," where AI systems continuously adapt to network conditions, reducing human intervention. However, this dynamic nature also introduces risks like real-time decision errors, which O-RAN addresses through rigorous validation and testing protocols.

While it's clearly a niche risk assessment model, it's pivotal for telecom operators deploying AI-driven technologies in highly sensitive infrastructure.

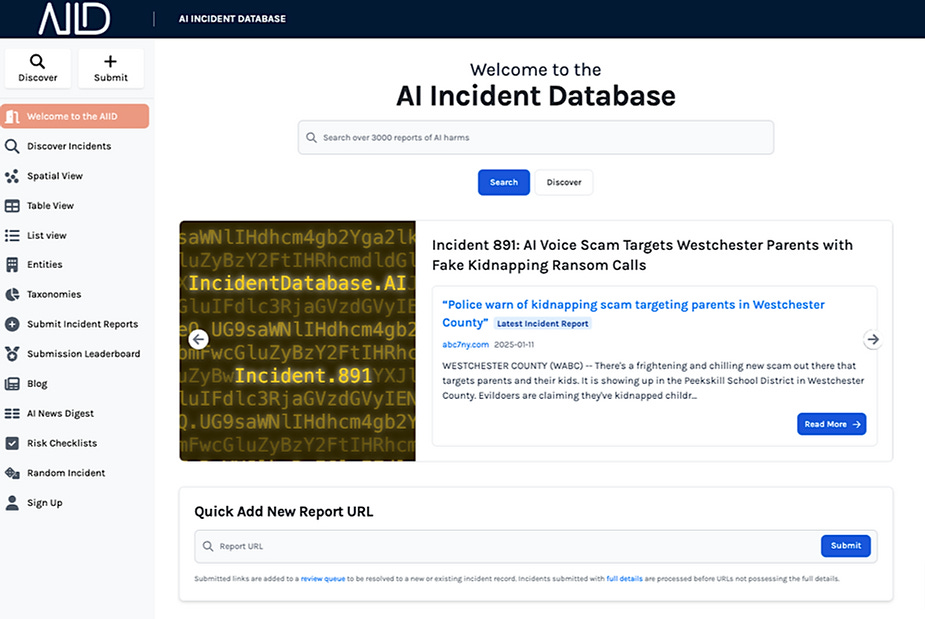

8. AI Incident Database (AIID)

The AIID collects and categorizes incidents where AI systems have caused harm or failed. It uses reports from public submissions, classifies incidents by type, and provides analysis to identify recurring risk patterns. Its value lies in letting you learn from past AI failures and proactively mitigate similar risks in your own systems.

Beyond serving as a database, AIID provides valuable meta-analyses of trends in AI failures, helping organizations identify systemic risks. It includes detailed classifications, such as whether adversarial attacks, algorithmic biases, or data issues caused incidents. You can use this data not just for reactive analysis but as a benchmarking tool to identify vulnerabilities in your systems proactively.

9. FATE Framework (Fairness, Accountability, Transparency, and Ethics)

Associated with research groups such as Microsoft Research's FATE group, this is more of a conceptual framework than an operational one, which can limit how directly it can be applied when embedding ethical principles into AI system design and deployment. It offers guidance for integrating fairness, accountability, transparency, and ethics into AI workflows, from data collection to post-deployment monitoring.

FATE emphasizes stakeholder engagement and encourages diverse input during AI system development to ensure fairness and inclusivity. It also stresses the importance of auditability by urging organizations to document decisions made during model training and deployment.

10. CRISP-DM (Cross-Industry Standard Process for Data Mining)

A standard process model for data mining, CRISP-DM is often adapted for AI model development. The framework ensures that risks are addressed at each stage:

Business understanding

Data understanding

Data preparation

Modeling

Evaluation

Deployment

CRISP-DM's iterative process has been widely adopted for AI projects, particularly in industries like retail and finance. Its "business understanding" phase ensures alignment between technical efforts and strategic goals to reduce the risk of misaligned AI deployments.

11. Asilomar AI Principles

Developed at the 2017 Asilomar Conference on Beneficial AI, these principles are not a technical risk assessment framework but a set of broad ethical goals promoting safe and beneficial AI development. Even so, they influence how organizations shape policies and governance to manage AI risks responsibly.

The principles urge developers to align AI development with broadly beneficial goals. For AI risk assessments, this could mean periodically reviewing AI systems to ensure they still align with the organization's evolving values and goals. It could also mean evaluating the unintended consequences of already deployed models, such as reinforcing systemic inequalities or financial exclusion.

Simplify AI Model Risk Assessment with Citrusˣ

Risk assessment models are essential for evaluating and mitigating potential risks in AI systems, from addressing bias and compliance issues to enhancing transparency and trustworthiness. Adopting these frameworks allows organizations to proactively identify and manage risks while ensuring their AI systems operate safely and ethically.

However, tools like LIME, SHAP, and AI Fairness 360 can be computationally expensive for large datasets or real-time systems, and the other frameworks have limitations of their own. This is where Citrusˣ’s comprehensive, end-to-end platform comes in, helping you run risk assessments more efficiently and cut costs. It simplifies AI model risk assessment by integrating seamlessly across the AI lifecycle. Citrusˣ supports transparency, compliance, and reliability through automated compliance monitoring and scalable risk assessments across multiple models.

With real-time automated reports, Citrusˣ tracks and explains AI model performance and behavior, enabling swift identification of emerging risks like data drift or bias before they escalate. Its risk mitigation tools empower your team to efficiently address these challenges, safeguard operations and user trust, and establish a foundation for responsible AI.

Book a Citrusˣ demo to see how it simplifies AI risk assessment and ensures compliance.